My ROSCon 2024 digest - Days 2 and 3

This year, our neighbor city Odense hosted ROSCon, which I had the honour to attend! I collected some notes for my future self (and maybe the present you?) in this post for days 2 and 3, and in a previous post for day 1 (which consisted of workshops and birds-of-a-feather sessions).


If any of these notes piques your interest, you can watch the full talks at this link. Enjoy!

- Simulation and AI Tools

- Data Management

- Communication Protocols and Middleware

- Diagnosis and Performance Monitoring

- Localization, Navigation, Motion Planning and Collision Avoidance
  - Learn Probabilistic Robotics with ROS 2
  - Beluga AMCL: a modern Monte Carlo Localization implementation for ROS
  - A ROS2 Package for Dynamic Collision Avoidance Based on On-Board Proximity Sensors for Human-Robot Close Interaction
  - GSplines: Generalized Splines for Motion Optimization and Smooth Collision Avoidance
  - Nav2 Docking
  - Radar Tracks for Path Planning in the presence of Dynamic Obstacles
  - Mesh navigation

- Software and Tool Integration

- Robotics Education and Community Resources

- Other Interesting tools

Simulation and AI Tools

Robotec.ai: Simulation and AI tools

Robotec.ai introduced us to their simulator. It integrates seamlessly with ROS (though it can also function independently), using NVIDIA’s PhysX physics engine and Open 3D Engine (O3DE) for rendering. Essentially, Robotec.ai has migrated the ROS simulation stack from Gazebo to O3DE, enhancing both the visual and functional capabilities of simulations.

The simulator is modular, allowing components like the physics engine to be swapped out based on specific project needs. While optimized for ROS, the simulator’s modular nature allows it to adapt to various robotic frameworks, offering flexibility for different use cases.

Robotec.ai provides tailored services to set up simulators and simulation environments suited to the customer’s specific requirements.

Later in the conference, a talk by Michal Pelka showed us how easy it is to set up O3DE for simulation.

USBL Simulator

One of my quick notes points to this repo with a USBL (ultra-short baseline) acoustic positioning simulator. The simulator is written in C++, with a ROS 2 node acting as a wrapper for it.

The simulator promises high-fidelity simulation, with features including (but not limited to) round-trip time (RTT) and time-difference-of-arrival (TDOA) noise and quantization simulation.

The parameters are configured in a YAML file, and the repository includes an example config file for an OEM USBL by Evologics.
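To picture what "RTT noise and quantization" means, here is a minimal sketch of simulating a slant range from a noisy, quantized round-trip time. It is not the repo's code: the constant sound speed, the noise standard deviation, the clock resolution, and the turnaround delay are hypothetical parameters chosen for illustration.

```python
import random

SOUND_SPEED = 1500.0  # m/s, assumed constant nominal speed of sound in sea water


def simulate_rtt(true_range_m, noise_std_s=1e-4, clock_res_s=1e-5, turnaround_s=0.0, rng=None):
    """Return a noisy, quantized round-trip time for a given slant range.

    noise_std_s, clock_res_s and turnaround_s are hypothetical parameters.
    """
    if rng is None:
        rng = random.Random()
    ideal_rtt = 2.0 * true_range_m / SOUND_SPEED + turnaround_s
    noisy_rtt = ideal_rtt + rng.gauss(0.0, noise_std_s)  # additive Gaussian timing noise
    # Quantize to the resolution of the (hypothetical) modem clock.
    return round(noisy_rtt / clock_res_s) * clock_res_s


def range_from_rtt(rtt_s, turnaround_s=0.0):
    """Invert a round-trip time back into a slant range estimate."""
    return (rtt_s - turnaround_s) * SOUND_SPEED / 2.0
```

With these values, quantization alone limits the range resolution to `clock_res_s * SOUND_SPEED / 2`, i.e. 7.5 mm. TDOA noise could be modeled the same way, by perturbing and quantizing arrival-time differences between receiver elements instead of the round-trip time.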

Automated testing framework with AWSIM and OpenScenario

AWSIM is an open-source simulator for self-driving vehicles based on Unity.


Data Management

Segments.ai

Segments.ai is a company based in Belgium. They provide tools for 2D and 3D data labelling, and an API to export the labels to whichever framework you use (e.g. PyTorch). They also provide a data labelling service. Their labels seem to be focused on object detection and segmentation in 2D and 3D (as you might have guessed from the name). Currently, they don’t appear to support localization directly, though I plan to explore this further through their demo offerings. Segments.ai offers free academic licenses and demo datasets.

Roboto.ai

Roboto.ai provides a platform for curating and analyzing robotics data.

With Roboto.ai, you can extract specific data from large datasets, which makes it especially useful for working with rosbags. Instead of handling one massive file, you can query only the data you need, optimizing memory usage. The platform also allows you to curate datasets based on events or anomalies detected within the data. Roboto’s API includes built-in functions for automatic anomaly detection, streamlining data analysis and cleanup. More info on the concepts and functionality can be found here, or in the GitHub repo.
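I haven't used Roboto's API, so the following is only a generic sketch of the "curate by events" idea, not Roboto's functionality: flag samples that deviate strongly from the rest of a signal (a simple z-score test, my own choice of detector) and turn them into padded, merged time windows that could then be cut out of a larger log. The function name and thresholds are hypothetical.

```python
import statistics


def anomaly_windows(timestamps, values, z_thresh=3.0, pad_s=1.0):
    """Return merged (start, end) time windows around anomalous samples.

    Assumes timestamps are sorted in ascending order.
    """
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0.0:
        return []  # constant signal: nothing stands out
    windows = []
    for t, v in zip(timestamps, values):
        if abs(v - mean) / std > z_thresh:
            start, end = t - pad_s, t + pad_s
            if windows and start <= windows[-1][1]:
                # Overlaps the previous window: extend it instead of adding a new one.
                windows[-1] = (windows[-1][0], max(windows[-1][1], end))
            else:
                windows.append((start, end))
    return windows
```

Each returned window is a candidate slice to extract, so only a few seconds around each event need to be loaded instead of the whole recording.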

Foxglove

Foxglove is a well-known tool for visualizing and debugging data. The data can be streamed from the cloud, from local files, or in real time from the robot. Foxglove also allows replaying the data (read here or here). One of Foxglove’s standout features is its ability to display multiple data sources simultaneously, offering flexible, customizable visualizations. For instance, it can look something like this:

…but it doesn’t have to: Foxglove is fully customizable! By the way, the sample above showcases our SubPipe dataset! Foxglove has a free plan, and provides free access to students and academics.


Communication Protocols and Middleware

Zenoh

Zenoh made a bold entrance at this year’s ROSCon with a strong marketing push to position itself as the next standard in robotics middleware, potentially overtaking DDS. Choosing between these options requires more than a quick discussion or blog post, but if you’re undecided, you might like to know that Zenoh offers a bridge to ROS 2 DDS. This bridge lets you use Zenoh’s capabilities alongside DDS without replacing your existing setup, so you keep DDS compatibility while leveraging Zenoh’s features.

eProsima

eProsima is well known for providing the most widely adopted DDS implementation today. Although Fast DDS, as used in ROS 2, has faced criticism for some networking issues, it’s worth noting (as their CEO emphasized to us) that these issues have been addressed in recent updates.

In addition to their popular open-source libraries, eProsima offers commercial solutions, including specialized plugins for low-bandwidth connections, catering to diverse and demanding network requirements.

Pixi package manager

Pixi is a package manager that works seamlessly on Ubuntu, macOS, and Windows. There are examples on setting up Python (as an alternative to conda) and C++ packages.

Here’s an example package showing how to use Pixi for a ROS 2 workspace.

Diagnosis and Performance Monitoring

How is my robot? - On the state of ROS diagnostics

Christian Henkel introduced us to a series of ROS packages for diagnostics from the ROS diagnostics stack. Aside from that, he presented a series of good practices that his team at Bosch applies to diagnostics:

- Diagnostics philosophy
  - Main purpose: observe the current state of the robot.
  - Think of it as a control panel where operators have all the information they need.
  - Try to limit the metrics to fewer than 10, ideally 2-3 per component.
  - Warnings are states that are unusual but allow continued operation.
  - Errors indicate states that do not allow the robot to operate further and must be addressed immediately.
  - Think about a logging and diagnostics concept in your team and document it.
- Comparison to other concepts. Diagnostics vs:
  - Logging:
    - Logging is (a lot) more verbose.
    - It captures the inner state of a SW component.
    - Logs are (usually) meant for later consumption and analysis.
  - Bagfiles:
    - It is useful to record diagnostics in bagfiles.
    - Bagfiles will also contain non-critical state info.
  - Testing:
    - Diagnostics help find the causes of failing tests more quickly, but don’t replace testing.
    - Crucial diagnostics may be tested themselves.
- Antipatterns
  - In general, diagnostics are not meant to be used functionally:
    - The error handling that a robotic system does by itself should not depend on diagnostics.
    - Diagnostics should help a human observer or technician understand a problem that the robot did not recover from.
  - The “right” amount of red:
    - Diagnostics must be tuned so that they only report real problems.
    - Otherwise, human observers get used to seeing error messages and don’t recognize critical ones.
    - Similarly, warnings should not be so frequent that they become meaningless.
  - Diagnostics must be received:
    - Diagnostics are meant as a communication method from robot to human.
    - So, in fully autonomous systems, they must be logged correctly and evaluated retroactively.
  - It is also worth differentiating between roles:
    - For example, whether an end user will see and/or understand the diagnostics content, or
    - whether it must only be consumed by a trained technician.
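To illustrate the "few metrics, meaningful levels" advice, here is a dependency-free sketch that maps a couple of per-component metrics to levels and reports the worst one. The numeric levels mirror the `OK`/`WARN`/`ERROR` constants of `diagnostic_msgs/DiagnosticStatus`; the `Metric` class, metric names, and thresholds are hypothetical. In a real ROS 2 node you would typically publish this through `diagnostic_updater` instead of hand-rolling it.

```python
from dataclasses import dataclass

# Same numeric values as diagnostic_msgs/DiagnosticStatus (OK=0, WARN=1, ERROR=2).
OK, WARN, ERROR = 0, 1, 2


@dataclass
class Metric:
    name: str
    value: float
    warn_above: float   # unusual, but the robot can keep operating
    error_above: float  # the robot cannot operate further

    def level(self) -> int:
        if self.value > self.error_above:
            return ERROR
        if self.value > self.warn_above:
            return WARN
        return OK


def component_status(metrics):
    """Return the worst level and a short message naming the metrics at that level."""
    levels = {m.name: m.level() for m in metrics}
    worst = max(levels.values(), default=OK)
    offenders = [name for name, lvl in levels.items() if lvl == worst and lvl != OK]
    message = "OK" if worst == OK else ", ".join(offenders)
    return worst, message
```

For example, a motor at 65 °C with `warn_above=60` and `error_above=80` yields `(WARN, "motor_temp_c")`, while the loop-time metric stays silent. Keeping the thresholds explicit per metric is one way to tune "the right amount of red".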

Scenic: a probabilistic language for world modelling

Scenic is a stochastic scenario generator and a powerful language for defining spatio-temporal relationships in scenes. Whether you’re creating environments for CARLA, Gazebo, Unity, or Webots, Scenic can model scenarios as a combination of scene distributions and agent behaviors.

Why is it useful? Scenic helps test cyber-physical systems against rare edge cases, design environments for exploration, and generate synthetic data for robust machine learning models. While it’s not available for Unreal Engine yet, its versatility makes it a game-changer for simulation and testing.
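Scenic has its own syntax, which I won't try to reproduce here. The plain-Python sketch below only illustrates the underlying idea of a scene as a distribution: sample object positions from simple distributions and discard samples that violate a hard requirement. As far as I understand, Scenic relies on rejection sampling of this kind (among other techniques) to enforce its `require` statements. The 10 m x 10 m area, the object names, and the minimum-gap constraint are hypothetical.

```python
import math
import random


def sample_scene(rng, min_gap=2.0, max_tries=1000):
    """Sample a robot and an obstacle in a 10 m x 10 m area, at least min_gap apart."""
    for _ in range(max_tries):
        robot = (rng.uniform(0.0, 10.0), rng.uniform(0.0, 10.0))
        obstacle = (rng.gauss(5.0, 1.0), rng.gauss(5.0, 1.0))  # obstacles cluster near the center
        # Hard requirement: reject scenes where the obstacle is too close to the robot.
        if math.dist(robot, obstacle) >= min_gap:
            return {"robot": robot, "obstacle": obstacle}
    raise RuntimeError("could not satisfy the scene requirements")
```

Calling `sample_scene(random.Random(42))` repeatedly yields a reproducible stream of valid scenes; rare edge cases can be targeted by skewing the distributions rather than hand-writing each scene.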


Scenario Execution for Robotics: A generic, backend-agnostic library for running reproducible robotics experiments and tests

Scenario Execution for robotics is a backend- and middleware-agnostic library that enables the robotics community to perform reproducible experiments at scale. Written in Python and built upon the generic scenario description language OpenScenario2 and pytrees, Scenario Execution reads a scenario definition from a file, translates it into a pytrees behavior tree, and then executes it. Although Scenario Execution can be used as a pure Python library, it is mainly targeted towards ROS 2. The backend-agnostic implementation allows Scenario Execution to be used with both simulated and physical robots, with minimal adaptations necessary in the scenario description file.


This tool allows testing the robot at runtime. It supports running various actions in parallel, as well as error injection.
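Scenario Execution builds on pytrees, and I haven't checked how it structures its trees internally. As a rough, dependency-free illustration of the concept, here is a tiny behavior tree with a sequence, a parallel node, and an action whose failure can be injected. The class names and the "succeed only when all children succeed" parallel policy are my own choices, not the pytrees API.

```python
SUCCESS, FAILURE, RUNNING = "SUCCESS", "FAILURE", "RUNNING"


class Action:
    """Leaf: RUNNING for `duration` ticks, then SUCCESS (or FAILURE if a failure is injected)."""

    def __init__(self, name, duration, inject_failure=False):
        self.name, self.duration, self.inject_failure = name, duration, inject_failure
        self.ticks = 0

    def tick(self):
        self.ticks += 1
        if self.ticks < self.duration:
            return RUNNING
        return FAILURE if self.inject_failure else SUCCESS


class Sequence:
    """Runs children one after another; stops at the first child that does not succeed."""

    def __init__(self, children):
        self.children, self.index = children, 0

    def tick(self):
        while self.index < len(self.children):
            status = self.children[self.index].tick()
            if status != SUCCESS:
                return status
            self.index += 1
        return SUCCESS


class Parallel:
    """Ticks all unfinished children; fails if any fails, succeeds when all succeed."""

    def __init__(self, children):
        self.children = children
        self.done = [False] * len(children)

    def tick(self):
        for i, child in enumerate(self.children):
            if self.done[i]:
                continue
            status = child.tick()
            if status == FAILURE:
                return FAILURE
            self.done[i] = status == SUCCESS
        return SUCCESS if all(self.done) else RUNNING


def run(tree, max_ticks=100):
    """Tick the root until it stops returning RUNNING (or the tick budget runs out)."""
    for _ in range(max_ticks):
        status = tree.tick()
        if status != RUNNING:
            return status
    return RUNNING
```

For instance, `Sequence([Action("undock", 2), Parallel([Action("drive", 3), Action("sensor_dropout", 2, inject_failure=True)])])` fails while the robot is still driving, which is the kind of injected fault a scenario test would check the robot's reaction to.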

With Scenario Execution, you define a logical scenario to reduce the number of possible scenarios within your abstract scenario.

A concrete scenario then has specific values to test.
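The refinement from logical to concrete scenarios can be pictured as follows: the logical scenario fixes the structure and gives each parameter a small set of candidate values, and each concrete scenario is one combination of those values. Scenario Execution reads its scenarios from OpenScenario2 files, so this Python sketch only shows the concept; the parameter names and values are hypothetical.

```python
import itertools

# Logical scenario: structure fixed, each parameter given candidate values (hypothetical).
logical_scenario = {
    "obstacle_speed_mps": [0.0, 0.5, 1.0],
    "goal_distance_m": [5.0, 10.0],
    "lighting": ["day", "night"],
}


def concrete_scenarios(logical):
    """Yield every concrete scenario, i.e. one specific value per parameter."""
    names = list(logical)
    for values in itertools.product(*(logical[n] for n in names)):
        yield dict(zip(names, values))


scenarios = list(concrete_scenarios(logical_scenario))
```

Here the logical scenario expands into 3 x 2 x 2 = 12 concrete scenarios, which also shows why narrowing the parameter space early matters: every extra parameter multiplies the number of runs.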

Localization, Navigation, Motion Planning and Collision Avoidance

Learn Probabilistic Robotics with ROS 2

If you work in robotics, it is extremely likely that you’ve heard about the Probabilistic Robotics book. There are plenty of repositories implementing its algorithms; however, as a developer, you might want to write your own implementation adapted to your needs.

As Carlos Argueta pointed out, learning these algorithms is challenging, especially when it comes to translating theory into actual code (and making it work in the real world).

In this repo, you can find a compilation of open-source implementations of the algorithms in the Probabilistic Robotics book, accompanied by an article explaining them.
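As a taste of the kind of algorithm covered, here is a minimal 1D Kalman filter: predict with a motion command, then correct with a position measurement. It is the standard Kalman filter reduced to one dimension, written from the textbook equations rather than taken from the repo; the noise variances in the usage example are hypothetical.

```python
def kf_predict(mean, var, u, motion_var):
    """Prediction step: shift the belief by the control u and grow its uncertainty."""
    return mean + u, var + motion_var


def kf_update(mean, var, z, meas_var):
    """Correction step: fuse the belief with a position measurement z."""
    k = var / (var + meas_var)  # Kalman gain: how much to trust the measurement
    return mean + k * (z - mean), (1.0 - k) * var
```

Starting from a very uncertain belief (`mean=0.0, var=1000.0`) and alternating `kf_predict(..., u=1.0, motion_var=0.1)` with `kf_update(..., meas_var=0.5)` on measurements near 1, 2, and 3, the mean converges close to 3 and the variance shrinks well below the measurement variance.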

Beluga AMCL: a modern Monte Carlo Localization implementation for ROS

In this talk, Franco Cipollone first introduced Beluga, a toolkit for Monte Carlo Localization (MCL) that operates in 2D on laser scan measurements. He pointed out, however, that AMCL algorithms are aging.

Under that premise, he introduced a new library Ekumen is working on: the Localization And Mapping BenchmarKINg Toolkit (LAMBKIN).

LAMBKIN provides a benchmarking application for localization and mapping systems, in which each component runs in a separate container. It relies on a series of open-source libraries and benchmarks, including evo-tools and timem.
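Coming back to the Monte Carlo Localization that Beluga implements: its core loop is predict (move particles with noisy odometry), weight (score particles against a measurement), and resample. The sketch below runs that loop in 1D with a single range-to-wall measurement and uses the low-variance resampler described in Probabilistic Robotics. It is a toy illustration, in no way Beluga's implementation; the wall position and noise values are hypothetical.

```python
import math
import random


def predict(particles, u, motion_std, rng):
    """Motion update: shift every particle by the odometry u plus Gaussian noise."""
    return [p + u + rng.gauss(0.0, motion_std) for p in particles]


def weigh(particles, z, wall_x, meas_std):
    """Measurement update: Gaussian likelihood of the range z to a wall at wall_x."""
    weights = [math.exp(-0.5 * ((z - (wall_x - p)) / meas_std) ** 2) for p in particles]
    total = sum(weights)
    if total == 0.0:
        # No particle explains the measurement: fall back to uniform weights.
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in weights]


def low_variance_resample(particles, weights, rng):
    """Low-variance (systematic) resampling: one random offset, n evenly spaced pointers."""
    n = len(particles)
    r = rng.uniform(0.0, 1.0 / n)
    c, i, out = weights[0], 0, []
    for m in range(n):
        u = r + m / n
        # Advance to the particle whose cumulative weight covers pointer u
        # (the i < n - 1 guard protects against floating-point round-off).
        while u > c and i < n - 1:
            i += 1
            c += weights[i]
        out.append(particles[i])
    return out
```

Each filter iteration calls `predict`, then `weigh` on the moved particles, then `low_variance_resample` with the resulting weights. Using a single random offset keeps the resampling variance low compared to drawing each particle independently.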

A ROS2 Package for Dynamic Collision Avoidance Based on On-Board Proximity Sensors for Human-Robot Close Interaction

GSplines: Generalized Splines for Motion Optimization and Smooth Collision Avoidance

Rafael A. Rojas presented his open-source library to represent and formulate motion and trajectory planning problems with generalized splines and piece-wise polynomials. Since motion planners output waypoints, these points must be joined to form a trajectory. The library obtains these trajectories with GSplines.

“A generalized spline is a piece-wise defined curve such that in each interval it is the linear combination of certain linearly independent functions”.

In contrast to ROS classes, the introduced GSplines class contains all the elements needed to derive polynomial trajectories for a given set of waypoints. ROS classes define points and trajectories as nested structures, as can be seen in the JointTrajectory and JointTrajectoryPoint classes (or messages):

JointTrajectory:

# The header is used to specify the coordinate frame and the reference time for
# the trajectory durations
std_msgs/Header header
# The names of the active joints in each trajectory point.
string[] joint_names
# Array of trajectory points.
JointTrajectoryPoint[] points

JointTrajectoryPoint:

# Single DOF joint positions for each joint relative to their "0" position.
float64[] positions
# The rate of change in position of each joint.
float64[] velocities
# Rate of change in velocity of each joint.
float64[] accelerations
# The torque or the force to be applied at each joint.
float64[] effort
# Desired time from the trajectory start to arrive at this trajectory point.
builtin_interfaces/Duration time_from_start

This array of nested points leads to an inefficient memory layout and complicates the translation into the flat vector format that optimizers require. There is also no clear interface for evaluation at arbitrary time instants, computation of derivatives, or time scaling.
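To make the layout problem concrete: an optimizer usually wants one flat vector of decision variables, whereas a JointTrajectory-like message stores one small struct per waypoint. The sketch below uses a simplified dataclass as a stand-in for `trajectory_msgs/JointTrajectoryPoint` (it is not the ROS message class, and the helper names are hypothetical) and shows the packing and unpacking code you end up writing by hand.

```python
from dataclasses import dataclass, field


@dataclass
class TrajectoryPoint:
    """Simplified stand-in for trajectory_msgs/JointTrajectoryPoint."""
    positions: list
    velocities: list = field(default_factory=list)
    time_from_start: float = 0.0


def flatten_positions(points):
    """Pack the per-waypoint position arrays into one flat decision vector."""
    return [q for pt in points for q in pt.positions]


def unflatten_positions(flat, n_joints, times):
    """Rebuild the nested structure from a flat vector (e.g. an optimizer's output)."""
    return [
        TrajectoryPoint(positions=flat[k * n_joints:(k + 1) * n_joints], time_from_start=t)
        for k, t in enumerate(times)
    ]
```

Every optimization iteration pays for this conversion, and velocities, accelerations, and timing would need the same treatment, which is the overhead a flat, self-contained spline representation avoids.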

To efficiently derive the trajectories for the given waypoints, the GSplines class represents:

- Solutions of the minimum-X trajectory passing through the given waypoints.

- Transformations:
  - Derivatives
  - Linear operations
  - Linear scaling

That is, the class itself implements derivatives, linear operations, and linear scaling. The class’ goal is for the derivative, scaling, and addition/scalar multiplication operations to form a monoid under composition. This gives nice properties when programming: operations can be chained freely, and the result is always another GSpline. By implementing the trajectory as a solution in a polynomial basis, the GSplines class allows implementing optimal control.
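Here is a toy illustration of that closure idea with a hypothetical `PiecewisePoly` class (not the gsplines API, and it does not solve the minimum-X problem): a piecewise polynomial in a local monomial basis whose derivative, time scaling, addition, and scalar multiplication all return another `PiecewisePoly`, and which can be evaluated at arbitrary times.

```python
import bisect


class PiecewisePoly:
    """On [breaks[k], breaks[k+1]]: p(t) = sum_i coeffs[k][i] * (t - breaks[k]) ** i."""

    def __init__(self, breaks, coeffs):
        assert len(coeffs) == len(breaks) - 1
        self.breaks, self.coeffs = list(breaks), [list(c) for c in coeffs]

    def __call__(self, t):
        # Pick the interval containing t (clamped to the first/last interval).
        k = min(max(bisect.bisect_right(self.breaks, t) - 1, 0), len(self.coeffs) - 1)
        tau = t - self.breaks[k]
        return sum(c * tau ** i for i, c in enumerate(self.coeffs[k]))

    def derivative(self):
        # d/dt of c_i * tau**i is i * c_i * tau**(i - 1); constants become [0.0].
        return PiecewisePoly(
            self.breaks, [[i * c for i, c in enumerate(cs)][1:] or [0.0] for cs in self.coeffs]
        )

    def time_scaled(self, s):
        """Return q(t) = p(t / s): the same path traversed over s times the duration."""
        return PiecewisePoly(
            [b * s for b in self.breaks], [[c / s ** i for i, c in enumerate(cs)] for cs in self.coeffs]
        )

    def __add__(self, other):
        assert self.breaks == other.breaks, "this toy version needs identical breakpoints"
        out = []
        for a, b in zip(self.coeffs, other.coeffs):
            n = max(len(a), len(b))
            a, b = a + [0.0] * (n - len(a)), b + [0.0] * (n - len(b))
            out.append([x + y for x, y in zip(a, b)])
        return PiecewisePoly(self.breaks, out)

    def __rmul__(self, scalar):
        return PiecewisePoly(self.breaks, [[scalar * c for c in cs] for cs in self.coeffs])
```

Because every operation stays inside the class, expressions such as `(2.0 * p + p.derivative()).time_scaled(3.0)` remain valid piecewise polynomials that can be evaluated anywhere, which is the practical payoff of the closure property.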

For more info, visit the repository’s GitHub page, which includes the code, theoretical explanations, and links to the associated publications.

Nav2 Docking

The Nav2 Docking Server is part of the Nav2 library, and it has its own dedicated repo. It can be used with arbitrary robots and docks for auto-docking. The ChargingDock and NonChargingDock plugins let you implement logic specific to your docking station, such as how to detect whether docking was successful. The docking procedure seemed to be wrapped in a behavior tree, and it can be set up to work within your own behavior tree. The docking steps are:

- Retrieve the dock pose.

- Navigate to the dock (if not within range).

- Use the docking plugin to detect the dock.

- Enter a vision-control loop to reach the docking pose.

- Exit the vision-control loop once contact has been detected.

- Wait until charging starts (if applicable) and return success.

The dock database can be provided as an external YAML file in the server config, or in the action request. The controller is nav2_graceful_controller. Further details on the docking API and dock configurations can be found in the server’s documentation.
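The docking steps listed above read naturally as a small state machine. The plain-Python sketch below is only my mental model of that flow, not the Nav2 Docking Server code: the `robot` object and all of its methods (`distance_to`, `navigate_to`, `detect_dock`, `contact_detected`, `step_towards`, `wait_for_charging`) and the `is_charging_dock` flag are hypothetical.

```python
def dock(robot, dock_pose, staging_range_m=1.0, max_iterations=200):
    """Hypothetical docking flow following the steps above; returns True on success."""
    # Steps 1-2: with the dock pose retrieved, navigate only if not already within range.
    if robot.distance_to(dock_pose) > staging_range_m:
        if not robot.navigate_to(dock_pose):
            return False
    # Step 3: use the dock plugin to detect the dock.
    if robot.detect_dock() is None:
        return False
    # Steps 4-5: control loop towards the detected dock pose until contact is detected.
    for _ in range(max_iterations):
        if robot.contact_detected():
            break
        robot.step_towards(robot.detect_dock())
    else:
        return False  # never made contact within the iteration budget
    # Step 6: for charging docks, wait until charging starts.
    return robot.wait_for_charging() if robot.is_charging_dock else True
```

Re-detecting the dock inside the loop mirrors the idea of a vision-control loop: the target is refined from fresh detections instead of trusting the initial estimate.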

Radar Tracks for Path Planning in the presence of Dynamic Obstacles

Obstacles in ROS 2 are typically represented by a stationary point in an occupancy grid, usually inflated by a user-defined radius and cost scaling factor. These points are updated frame-to-frame via ray tracing. A limitation of this approach is that it is difficult to account for obstacle dynamics.
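To see why dynamics matter, here is a rough sketch that computes an inflation cost with the exponential decay that, as far as I know, the Nav2 inflation layer applies beyond the inscribed radius, and evaluates it around where a tracked obstacle is predicted to be at a future time, assuming constant velocity. This is not from the talk: the `(x, y, vx, vy)` track format and all parameter values are hypothetical, and distances are in meters rather than grid cells.

```python
import math

LETHAL = 254     # cost at the obstacle itself
INSCRIBED = 253  # cost within the robot's inscribed radius


def inflation_cost(distance_m, inscribed_radius_m, cost_scaling_factor):
    """Exponentially decaying cost, modeled on the costmap inflation formula."""
    if distance_m <= 0.0:
        return LETHAL
    if distance_m <= inscribed_radius_m:
        return INSCRIBED
    factor = math.exp(-cost_scaling_factor * (distance_m - inscribed_radius_m))
    return int((INSCRIBED - 1) * factor)


def predicted_position(track, t_s):
    """Constant-velocity prediction of a track (x, y, vx, vy), t_s seconds ahead."""
    x, y, vx, vy = track
    return x + vx * t_s, y + vy * t_s


def cost_at(point, track, t_s, inscribed_radius_m=0.3, cost_scaling_factor=3.0):
    """Cost of a point at time t_s, given where the tracked obstacle will be."""
    return inflation_cost(
        math.dist(point, predicted_position(track, t_s)), inscribed_radius_m, cost_scaling_factor
    )
```

For an obstacle at x = 5 m moving towards the origin at 1 m/s, the point (2, 0) is essentially free now but lethal in 3 s, exactly the information a static inflated point cannot express.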

Mesh navigation

The Mesh Navigation software bundle allows efficient robot navigation on 2D manifolds, which are represented in 3D as triangle meshes. It enables safe navigation in various complex outdoor environments by using a modularly extensible layered mesh map. Layers can be loaded as plugins representing specific geometric or semantic metrics of the terrain. This allows obstacles in these complex outdoor environments to be incorporated into path and motion planning.

Software and Tool Integration

Main Street Autonomy

The main product advertised by Main Street Autonomy was their sensor calibration tool, “Calibration Anywhere”. This tool calibrates all lidar, radar, camera, IMU, and GPS/GNSS sensors in a single pass, providing both intrinsic and extrinsic parameters, along with time offsets between sensors, all without the need for checkerboards or targets. It is definitely a very interesting product. What impressed me most, however, was their vSLAM solution, which demonstrated impressively stable features even in challenging scenarios with highly uniform textures, as shown in the photo here.

CROSS: FreeCAD and ROS

CROSS is a FreeCAD workbench to generate robot description packages (xacro or URDF) for ROS.

Robotics Education and Community Resources

The definitive guide to ROS Mobile Robotics

Steve Macenski introduced us to one of his latest papers, a survey that answers the following questions:

- What are the algorithm choices?

- How do they work?

- When should I use what, and why?

- What features exist?

- How do they compare computationally?

FOSS Books

VM (Vicky) Brasseur presented her books to us:

- Forge Your Future with Open Source

- Business Success with Open Source. Some sections of this book are available.

IEEE Robotics and Automation Practice (RA-P)

A new IEEE journal with less focus on theoretical contributions and more on applied research. Link to the journal here.

Awesome conferences and schools list

A much-needed repository with links to interesting robotics conferences and schools, with a list going as far as 2028! Follow the link here.

Other Interesting tools

Happypose

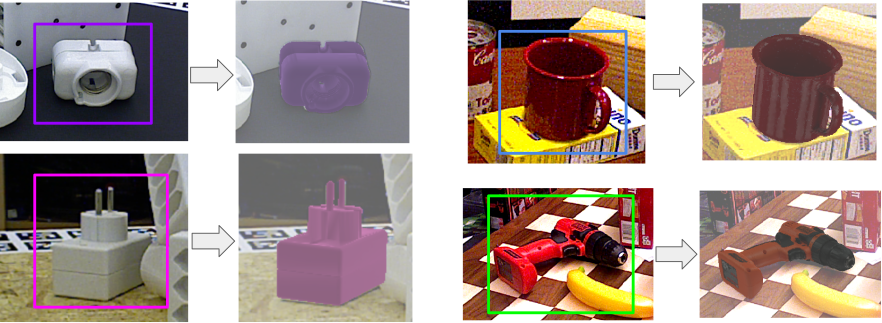

Krzysztof Wojciechowski presented happypose_ros, a ROS 2 wrapper of Happypose for 6D object pose estimation. Given an RGB image and a 2D bounding box of an object with a known 3D model, the 6D pose estimator predicts the full 6D pose of the object with respect to the camera. In summary, Happypose provides a library for:

- Single camera pose estimation.

- Multi camera pose estimation.

- 6D pose estimation of objects in the scene (for the pretrained objects). Easy fine-tuning with different objects is planned as future work.

- ROS API.

The slides on how the method works can be found here.
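For readers new to 6D pose estimation: the output is a rotation R and translation t of the object with respect to the camera, which is what lets you, for instance, project the known 3D model back into the image to check the estimate. The sketch below does exactly that with a pinhole camera model. It does not use Happypose's API; the function name, intrinsics, and pose values are made up for illustration.

```python
def project_points(model_points, R, t, fx, fy, cx, cy):
    """Transform object-frame points into the camera frame with (R, t), then project them."""
    pixels = []
    for p in model_points:
        # Camera-frame point: X_c = R @ X_o + t (R as a 3x3 nested list, t as a 3-tuple).
        xc, yc, zc = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
        if zc <= 0.0:
            pixels.append(None)  # behind the camera: not visible
            continue
        # Pinhole projection with focal lengths (fx, fy) and principal point (cx, cy).
        pixels.append((fx * xc / zc + cx, fy * yc / zc + cy))
    return pixels
```

With an identity rotation and the object 1 m in front of a camera with `fx = fy = 500` and principal point (320, 240), the object origin lands on the principal point and a model point 10 cm to the side lands 50 px to the right.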

Pssst, ey, look, we are women in robotics, wooo!
