Deep Learning Datasets in the COVID era

In 2020 I was expecting to collect a dataset for my Deep Learning PhD project, but instead, 2020 brought a quarantine. This was quite frustrating: how can I keep going with my project if I can’t even take the first and most basic step?

Well, I guess that once again the answer is on the internet. Aren’t there millions of pictures online that I can use to build my own database? Sure there are. However, this idea raises some questions:

- Is there any tool that can help me with this? I could just google “cat pics” (to keep going with the typical cat example) and download the ones that fit my needs, but that seems extremely time-consuming and inefficient.

- What about image rights? I’m not quite sure which rights I need in order to use images in a dataset, so I’ll share my findings on this here.

Gathering Images for your database

Here, I’ll go through the most interesting methods I found to gather images from the internet (Google, Bing, and Flickr), to see which of them are suitable for creating a Machine Learning database.

From other Datasets

ImageNet

The ImageNet database contains almost 15M images (at the time of this writing), labelled according to the nouns in the WordNet database. In its browser, you can search for a word and get the meaningful words that are synonyms of it. Words that are close in meaning are grouped into synsets (synonym sets).

From Google

Google is the most popular search engine, so it is no wonder that there are several tools online for downloading pictures from it.

Making custom scripts

The first solution I found for this was from the great blog pyimagesearch (seriously, is there any computer vision problem for which this blog doesn’t already have a solution?). I won’t go into details since it is already clearly explained in the original post, but I’ll give a quick summary so you can get an idea of how much human effort this solution requires. It can basically be divided into three steps:

- Search the query term on Google images.

- Gather the URLs into a text file using the JavaScript console. You’ll scroll through the results, and the script will simulate clicking on them and copying the URL for you.

- Download the images from the URLs using a Python script.

This is indeed a very interesting solution. However, after doing all the programming, you still need to scroll through the results yourself. It is not a big deal, but it takes up your time on something that could be automated.
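To give an idea of what the third step involves, here is a minimal sketch of a download script. It is not the code from the original post: the function names `fetch_bytes` and `download_images` are my own, and I’m assuming the text file holds one URL per line. Failed downloads are skipped rather than retried.

```python
from pathlib import Path
from urllib.parse import urlparse
from urllib.request import urlopen


def fetch_bytes(url, timeout=10):
    """Download the raw bytes behind a URL (hypothetical helper)."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


def download_images(url_file, out_dir, fetch=fetch_bytes):
    """Save every URL listed in url_file (one per line, an assumption) into out_dir.

    Returns the list of files that were actually written.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)

    # Ignore blank lines in the URL file.
    lines = Path(url_file).read_text().splitlines()
    urls = [line.strip() for line in lines if line.strip()]

    saved = []
    for index, url in enumerate(urls):
        # Keep the extension from the URL when there is one, else guess .jpg.
        suffix = Path(urlparse(url).path).suffix or ".jpg"
        target = out / f"{index:05d}{suffix}"
        try:
            target.write_bytes(fetch(url))
        except Exception as error:
            # Broken links are common in scraped lists, so skip and move on.
            print(f"skipping {url}: {error}")
            continue
        saved.append(target)
    return saved
```

Calling `download_images("urls.txt", "cats")` would then fill a `cats` folder with numbered files. The `fetch` argument is only there so the function can be tried out without a network connection.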

To conclude:

- It is not straightforward to implement.

- It is not fully automated.

- It doesn’t take into account the copyright of the pictures.

Google Image downloaders

I found a bunch of Python libraries that are supposed to download pictures from Google Images with just a single shell command or a short script. However, they recently stopped working. The next workaround was a similar script, but this time for Bing. But just like the others, it no longer works.

They all raise the same error: the images are not downloadable. There are several GitHub issues about this problem, and it seems that the links created by the scripts no longer work because Google has recently changed the way it presents the data (and, I guess, the same goes for Bing).

I’m sorry for the bad news, but I think it is worth pointing out so that you don’t lose time trying to make these libraries work. But don’t worry, below I’ll show you some working solutions!

Simple Image Download

This script is pretty simple, but it is the only one I found that still works with Google at the time of writing. You basically define the search query and the maximum number of pictures, and it downloads them for you.

Cat picture without Copyright downloaded from Google. Picture by IRCat in Pixabay


I tried it by downloading 200 pictures, and I’d say that up to this point this is the best solution, since it is very straightforward and it worked perfectly fine.

In conclusion:

- It’s simple and works well.

- But it doesn’t take into account the copyright of the pictures.

Flickr Scraper

There’s a similar alternative to the Google Images scripts, but for Flickr. And yes, this one works!

Cat picture with no Copyright restrictions downloaded from Flickr.


You need a Flickr account in order to use it, and you have to ask for permissions (non-commercial in my case). They also ask you for data about your “app”, and to agree to the API terms of use. Nobody likes making more accounts and accepting more terms, and I agree that it is a headache, but I have to say that in this case it’s very convenient, because it will help us with the whole “rights of use” thing.

I’ve read the terms (yes, I know), and by default the photos are owned by the user that uploaded them. If a photo is marked as “All Rights Reserved”, it cannot be copied or used in any way without permission, so if you want to use it, you must ask the owner. The Flickr API has its own terms, but it clearly says that its use must “comply with any other terms and conditions a user has attached to his or her photo”. Unfortunately, the Flickr Scraper doesn’t filter images by their rights. However, since the license is in the metadata of the image, surely there is a way to implement that.
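As a rough idea of how such a filter could look, here is a small sketch that keeps only the photos whose license is on an allow-list. It is not part of the Flickr Scraper. I’m assuming each photo comes as a dictionary with a `license` field (which, as far as I know, is what the Flickr API returns when you request `extras=license`), and the license ids below are my reading of `flickr.photos.licenses.getInfo`, so please verify them against the live API before relying on them.

```python
# License ids as I understand them from flickr.photos.licenses.getInfo.
# Treat this mapping as an assumption and check it against the API.
ALLOWED_LICENSES = {
    "4": "CC BY 2.0",
    "5": "CC BY-SA 2.0",
    "9": "CC0 1.0",
    "10": "Public Domain Mark",
}


def filter_by_license(photos, allowed=ALLOWED_LICENSES):
    """Keep only the photo records whose license id is in the allow-list.

    Records without a license field are dropped, to stay on the safe side.
    """
    return [photo for photo in photos if str(photo.get("license")) in allowed]


# Made-up records, only to show the shape of the data I am assuming.
photos = [
    {"id": "1", "license": "0"},  # All Rights Reserved
    {"id": "2", "license": "4"},  # CC BY
    {"id": "3"},                  # no license information at all
]
print([photo["id"] for photo in filter_by_license(photos)])
```

Which licenses go on the allow-list depends on what you plan to do with the dataset, so the four ids above are just an example.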

So, in conclusion:

- Flickr Scraper is easy to use and works well.

- However, it requires a user account.

- And it doesn’t take into account the copyright of the pictures.

Rights of use for ML Datasets

So far I’ve mentioned the (non-)existence of filters for selecting which images to download according to their rights of use… but which rights do we need in order to use a picture in our dataset?

I want to emphasize that this debate doesn’t apply to the training of the algorithm: copyright applies to copying data, so as long as the original images cannot be reconstructed from the algorithm, we’re covered. The problem arises when making the dataset publicly available.

Some datasets based on public images are Google’s Open Images, Microsoft’s COCO, ImageNet, etc. They store the URLs of the images that form the dataset, but this leads to significant data loss if the URLs break. Note that ImageNet contains images licensed under CC BY 2.0.

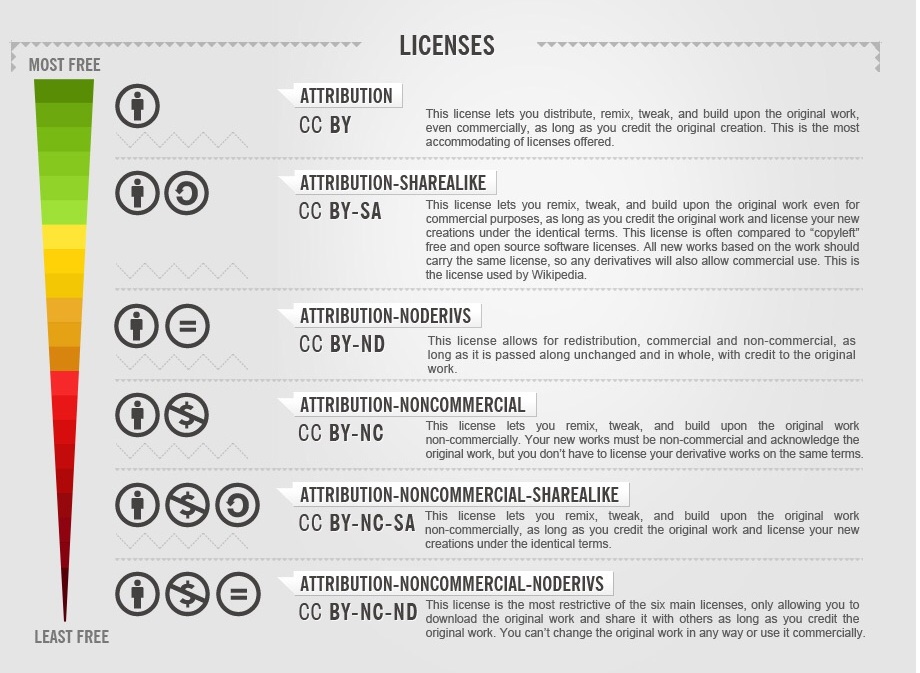

If you save a cached copy of the images, there is no problem if you use them internally, but in case of wanting to offer them as a public download, you must take the Copyright into account. You would have to use public domain data or data with a Creative Commons license. Here is a table that shows the different kinds of CC licenses:

The most restrictive CC license doesn’t allow using the picture for commercial purposes or modifying it, and you need to credit the author. So, let’s apply this to (my) situation:

- I’m a researcher, so no commercial use is being made.

- I’m downloading the picture as is, so no modification is being made. However, no data augmentation could be done with such pictures!

- If I keep the metadata of the picture, I’m keeping the attribution.
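The same reasoning can be written down as a small lookup, which is handy when checking many pictures at once. This is only a sketch: the names `CC_LICENSES` and `license_allows` are my own, all six licenses require attribution, and treating data augmentation as a “modification” is my interpretation rather than legal advice.

```python
# The six standard Creative Commons licenses and what each one permits.
# Attribution (BY) is required by all of them.
CC_LICENSES = {
    "CC BY":       {"commercial": True,  "derivatives": True},
    "CC BY-SA":    {"commercial": True,  "derivatives": True},   # derivatives must keep the same license
    "CC BY-ND":    {"commercial": True,  "derivatives": False},
    "CC BY-NC":    {"commercial": False, "derivatives": True},
    "CC BY-NC-SA": {"commercial": False, "derivatives": True},   # derivatives must keep the same license
    "CC BY-NC-ND": {"commercial": False, "derivatives": False},
}


def license_allows(license_name, commercial_use, modify):
    """Return True if the license permits the intended use."""
    terms = CC_LICENSES[license_name]
    if commercial_use and not terms["commercial"]:
        return False
    if modify and not terms["derivatives"]:
        return False
    return True


# My case: research use, pictures kept as they are.
print(license_allows("CC BY-NC-ND", commercial_use=False, modify=False))
# Same license, but with data augmentation counted as a modification.
print(license_allows("CC BY-NC-ND", commercial_use=False, modify=True))
```

The first call should print `True` and the second `False`, which is exactly the data augmentation problem mentioned in the list above.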

Moreover, different countries have different regulations for data mining and research purposes. The US and the UK consider data mining as fair use, while in the European Union we have different Articles on this issue, including the Copyright Final Compromise in Article 13. However, it is not very clear, and it seems to talk more about the need for regulation than about the regulation itself. Article 3 says that reproduction and extraction are allowed for “research organizations and cultural heritage institutions”, where a research organization is a non-profit entity or a public institution. Article 3(2) says that saving and storing the data “for the purposes of scientific research, including for the verification of research results” is allowed, as long as the copies are kept in a secure environment and retained no longer than necessary to achieve the scientific research objectives. Finally, Article 4 allows the owners to opt out of the exception by adding robots.txt metadata.
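Checking a site’s robots.txt before downloading from it can be done with Python’s standard library. The sketch below only tells you whether the robots.txt rules allow a given URL, which is not the same as a legal answer, and the user agent name `my-dataset-bot` is made up for the example.

```python
from urllib.robotparser import RobotFileParser


def allowed_by_robots(robots_txt, url, user_agent="my-dataset-bot"):
    """Check a URL against the text of a robots.txt file.

    robots_txt is the content of the file as a string, so the check itself
    needs no network access. The user agent name is a placeholder.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Example rules: everything is allowed except the /private/ folder.
rules = "User-agent: *\nDisallow: /private/\n"
print(allowed_by_robots(rules, "https://example.com/public/cat.jpg"))
print(allowed_by_robots(rules, "https://example.com/private/cat.jpg"))
```

In a real crawler you would first download `https://<site>/robots.txt` for each site you scrape and pass its text to this function.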

So, to sum up, what I’ve understood so far is that:

- You can store any (copyrighted or not) data and use it to train or verify your ML algorithms as long as it is not explicitly prohibited by the owner in the metadata.

- You cannot redistribute (in this case, as a dataset) copyrighted data.

- You can redistribute data under a CC license.

I’ve found many articles online with opinions on this topic, but very few about the facts on copyright restrictions. I’ll surely keep investigating and updating this post with more facts on this issue. This is a personal interpretation of all the information I could gather, so if you have any additional comments or different interpretations, I would love to hear them from you!